I gave a talk at UX Australia 2016 in Melbourne (August 25–26) titled “Design is as good, or as flawed, as the people who make it”. Slides are available at http://www.uxaustralia.com.au/conferences/uxaustralia-2016/presentation/design-people/ and audio is available below.

No one sets out to design a system that is hard for some users to use, or worse, one that excludes or discriminates against them. Designers are trying their best. You’re probably a good person, but you’re human nonetheless, and therefore not perfect. Design can only be as good as the people who make it. Conversely, design is as flawed as the people who make it.

You are (probably) awesome (yes, there is a “but” coming)… but you are definitely biased. Me too. We all are.

A father and his son are involved in a horrific car crash, and the father dies at the scene. When the boy arrives at the hospital and is rushed into the operating theatre, the surgeon pulls away and says: “I can’t operate on this boy, he’s my son.”

How can this be? Do you know?

Of course, the surgeon is the boy’s mother. But how long did it take you to figure that out? If you didn’t come up with this answer, don’t worry, you are not alone. You might even have come up with “the boy has 2 dads”: it seems our biases have caught up with same-sex couples, but women still can’t be doctors.

This doesn’t mean that you actively think women can’t be doctors, or that you’re sexist; it simply illustrates an unconscious bias that you probably weren’t aware of.

Unconscious (implicit) Bias

Unconscious bias refers to a bias that we are unaware of, and which happens subconsciously, outside of our control. It happens automatically, triggered by our brain making quick judgments and assessments of people and situations, influenced by our background, cultural environment and personal experiences. These are mental shortcuts that your brain takes.

You’re faced with around 11 million pieces of information at any given moment and your brain can only process about 40 of those bits of information. So it creates these shortcuts and uses past knowledge to make assumptions.

System 1 & System 2 Thinking

Psychologist Daniel Kahneman describes this as System 1 and System 2 thinking.

System 1 thinking is:

- Quick

- Automatic decisions

- Effortless

- Impulsive

- Generally stereotypical

On the other hand, System 2 thinking requires:

- Careful attention

- Focus

- Effort

- Reasoning

System 2 takes energy that the brain wants to conserve.

You hear a rustling in the trees. Your unconscious bias is great at using past experience to think maybe this is a tiger, and put you into fight or flight mode. This is rational as it’s better to be safe than sorry.

It’s the same reason you might duck, or try to hide around a corner, while playing a video game: your brain sees something coming at you and moves your body before you are consciously aware of it. You know it’s irrational to duck from the bullets in Time Crisis on the PS1 (or whatever more up-to-date reference I should be using), but your brain has learned from previous experience to get your body out of danger.

When you think you should turn around or cross the street when you come into contact with this person, that’s probably OK.

But what this person looks like is playing into your unconscious biases. For example, when my partner shaves his head, he notices people act differently around him.

Our biases differ. A recent NPR podcast told the story of 2 women faced with a group of men on the street who were predominantly of African American appearance. The Caucasian woman remarked that they should maybe cross the street, while the African American woman was surprised, as she actually felt safer: the men would come to her rescue if something went wrong, because they were her people. This is affinity bias.

Some types of bias

Affinity Bias

Affinity bias is that sense of familiarity with someone who has things in common with you. You instantly like them, they’re like you. Your mind generates justifications as to why you should like them.

Confirmation Bias

Another bias is confirmation bias: the tendency to seek out information that confirms our pre-existing beliefs and assumptions. It’s also the tendency to see a member of a certain group conform to a stereotype and take it as confirmation that the stereotype is true, rather than objectively looking at the facts. I’ve heard people notice that a driver who cut them off was of Asian appearance and say “See, Asians can’t drive”, ignoring the obvious truth that not all Asians are bad drivers, and many bad drivers are not Asian. The same is true of so-called “positive” stereotypes: black guys are good at basketball, Africans are good runners, etc.

The availability heuristic also feeds into this: the easier it is for you to think of an example of something, the more likely you are to believe it happens frequently.

Perception Bias

These stereotypes and assumptions feed into perception bias: once you have them, it is difficult to make an objective judgment about members of that group.

Rabbit or duck?

What is this a picture of? Is it a rabbit or a duck? Once you see it one way, it can’t be unseen. At first glance you see one or the other, depending on your frame of reference. You have no reason to consider that you might be seeing something different from what others see. We trust our senses to be true.

Unconscious biases in practice

These biases start to become a problem when they feed into hiring decisions and design decisions. Unconscious biases in practice lead to things like:

The gender gap in our industry

- Studies showing that average resumes with “typically white” names receive more interviews than highly skilled resumes with “typically black” names

- Heidi and Howard: identical case studies receiving different performance reviews based only on the name. Heidi is not liked; she is aggressive. Howard, meanwhile, is assertive; he’s showing leadership, which is expected from a man

- The fact that 60% of US corporate CEOs are taller than 6 feet, while less than 15% of the general population is this tall. They’re also disproportionately white males. There are likely several unconscious biases at play here.

In the absence of detailed information, we all work from assumptions about who the user is, what he or she does, and what type of system would meet his or her needs. Following these assumptions, we tend to design for ourselves, not for other people.

— Richard Rubenstein & Harry Hersh

Apple’s homogenous board

This is most of Apple’s board: very homogeneous, and let’s face it, a lot of tech companies share the same lack of diversity. When we design products for ourselves, we end up with what I like to call male first design, white first design and able first design.

Male first design

Crash Test Dummies

When safety regulations were introduced in the 1960s, regulators wanted to require two crash test dummies: a 95th percentile male and a 5th percentile female, meaning that only 5% of men were larger than, and only 5% of women smaller than, the dummies. Automakers pushed back until the requirement was reduced to a single crash test dummy: a 50th percentile male (the average man). As a result, female drivers are 47% more likely to be seriously injured in a car crash, and children are at greater risk too. This has started to shift, but female crash test dummies weren’t required until 2011. This makes me question my ’99 Ford.

Office Temperature

Algorithms that determine optimal office temperature were designed in the 1960s around a 70 kg man, back when the office was assumed to be full of them. We women, with our lower muscle mass, tend to feel colder than men, so we end up cold in the office.

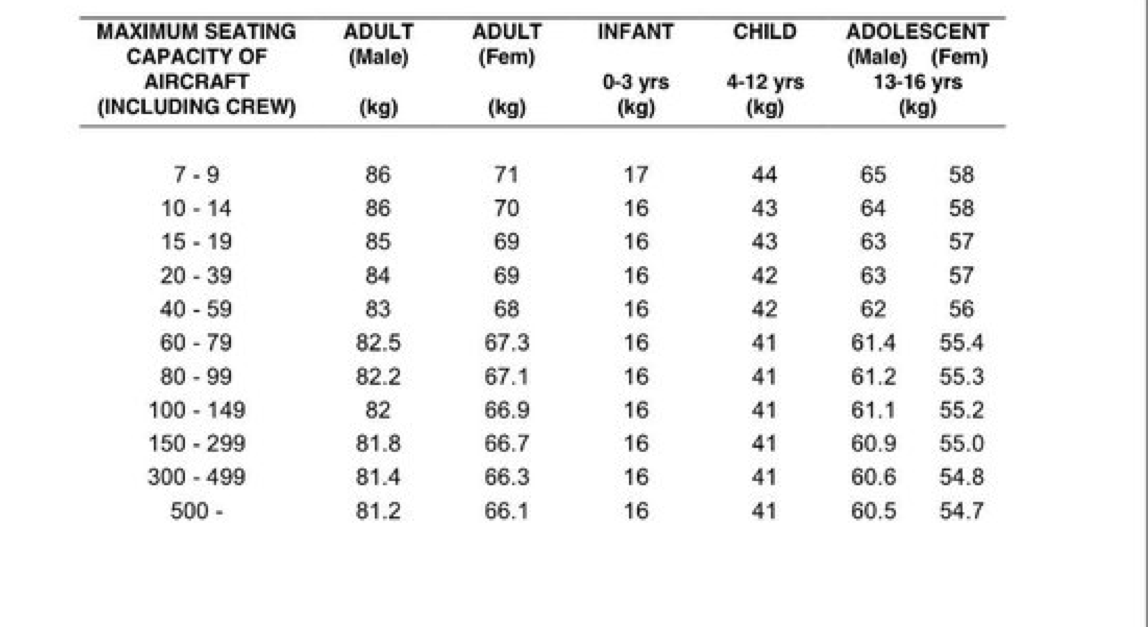

Airplane Weight Assumptions

If you’ve booked a flight online, you’ve probably noticed that you’re asked for your gender. But did you know that this is used to calculate the weight the aircraft is carrying? And it’s based on average weights from a 1970s study from the Civil Aviation Safety Authority.

Airplane weight assumptions

I don’t know about you, but I weigh more than 66kg which makes me more than a little concerned. If this is the difference between the plane going down or not, I will gladly tell you my honest weight.

IconSpeak

On a lighter note, this t-shirt, IconSpeak, is designed to help you communicate in foreign countries by pointing to an icon for what you want. But on a woman, these icons mean I have to point awkwardly at my chest.

Or I could just be respectful and learn a few words in the language of the country I am visiting. Hello. Goodbye. Toilets. Bar.

White first design

We’ve seen male first design; we’ve also got white first design.

Film

When film was created (as in physical photographic film strips made of plastic and chemicals, which some of you are way too young to remember), it used layers of chemicals sensitive to different wavelengths of light, and chemical solutions to develop the negatives into photographs with the correct colour balance.

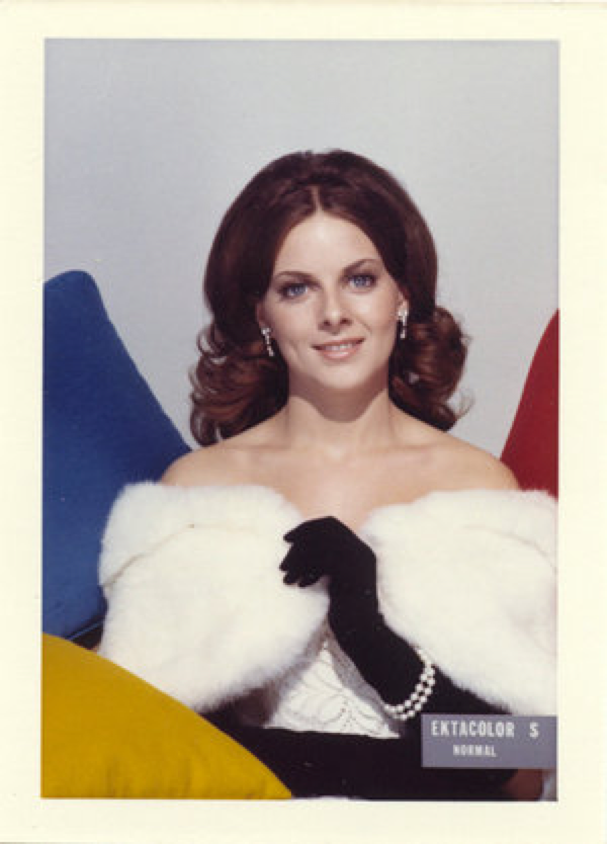

The Shirley card

The Shirley card was used to calibrate colour accuracy: matching Shirley’s skin tone meant achieving a “normal” colour balance, and that setting was applied to everyone’s film, regardless of skin colour.

This meant that darker skin tones were rendered poorly, and photos of mixed-race individuals, or of people with different skin tones, looked bad. We still haven’t nailed this with digital cameras. The HP MediaSmart computer’s camera was meant to follow faces around, but it only worked on lighter skin: it could not detect darker faces.

By the 1970s, Kodak was getting some pushback about not rendering colours correctly. It was eventually forced to make a film, Gold Max, that could distinguish these colours, but not in response to the racial divide: it was because furniture manufacturers couldn’t show the difference between wood stains, and chocolate advertisers couldn’t showcase dark and milk chocolate as separate products.

Skin Colour

Unfortunately, white is still the default for many product lines.

Skin colour stockings. Nude concealer. ‘Skin colour’ pencils.

My beautifully caramel, half-Indian, 4-year-old niece was colouring in, and I asked her what colour she was using. She said “Skin colour”. Then she paused, thought for a moment and said “your skin colour, not mine”.

Biases in Tech products

People are biased, so design cannot be neutral. The way technology is applied is inherently biased.

Apple Watch

When the Apple Watch first came out, it told you to stand up every hour. Imagine being in a wheelchair and getting that notification over and over again. Every hour.

Stand up for a minute.

Another example: the heart rate reader does not work consistently or accurately on people with dark tattoos or darker skin.

Women in release 2

Women have been counting their cycles for decades. Centuries, maybe millennia? Stars or fake names drawn on calendars to mark the upcoming event.

There are hundreds of period trackers in the App Store. Normally, when a type of app is this successful, Apple incorporates it into iOS itself: think calculator, flashlight etc.

But when Apple built an app to see “your whole health picture” and “all of your metrics that you’re most interested in”, they missed a basic health feature for half the world. A company where just 22% of technical roles are filled by women neglected to include menstruation, a barometer of women’s health.

By iOS 9 they’d remembered to include it.

Following the “women in release 2” trend, Mickey Mouse watch faces were available in watchOS 1, but Minnie had to wait until watchOS 2.

It’s not just Apple

When YouTube launched its video upload app for iOS, between 5 and 10 percent of videos uploaded by users were upside down. Why? Phones are usually rotated 180 degrees when held in the left hand, and the exclusively right-handed development team had built an app that worked best for themselves, forgetting about the 10% of the population who are left-handed.
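The upside-down uploads come down to a single orientation assumption. Below is a minimal Python sketch, not YouTube’s actual code: a frame is modelled as a 2D list of pixel values, and the orientation labels `"landscape_left"` and `"landscape_right"` are hypothetical stand-ins for whatever the device reports. The naive version assumes every phone is held the way the team held theirs; the orientation-aware version reads the reported orientation and rotates by 180 degrees when needed.

```python
# A frame is a grid of pixel values (rows of ints) in this simplified model.
Frame = list[list[int]]


def rotate_180(frame: Frame) -> Frame:
    """Rotate a frame by 180 degrees: reverse the row order and each row."""
    return [row[::-1] for row in frame[::-1]]


def naive_upload(frame: Frame, orientation: str) -> Frame:
    # Ignores the reported orientation: assumes everyone holds the phone
    # the same way the (right-handed) development team did.
    return frame


def orientation_aware_upload(frame: Frame, orientation: str) -> Frame:
    # Hypothetical convention: "landscape_left" is the grip the team tested,
    # "landscape_right" is the same phone turned 180 degrees (e.g. left hand).
    if orientation == "landscape_right":
        return rotate_180(frame)
    return frame


captured = [[1, 2], [3, 4]]  # sensor output from a phone held the "other" way
print(orientation_aware_upload(captured, "landscape_right"))  # [[4, 3], [2, 1]]
```

The fix is trivial once someone thinks of it; testing with a left-handed user is what surfaces the case in the first place.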

Other tech examples

I’ve said it before: women can be doctors. Yet someone sat down and wrote a line of code that said: if sex = female and title = Dr, throw an error.

Dr Selby’s gym card would not give her access to the female change rooms, because anyone using the title Dr was automatically categorised as male. The Cambridge gym responded: “Unfortunately we have found a bug in our membership management system which is causing the issue.”

This isn’t a bug. This is a line of code someone has written.
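To make the point concrete, here is a hypothetical Python sketch of the kind of rule that produces this behaviour, next to a version that doesn’t make the assumption. The `Member` class and both functions are invented for illustration; we don’t know what the gym’s membership system actually looked like. The fix is simply to treat title and sex as independent fields and use the member’s own answer.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Member:
    name: str
    title: str
    sex: Optional[str] = None  # whatever the member told us, if anything


def biased_change_room(member: Member) -> str:
    # The assumption written into code: anyone titled "Dr" must be a man.
    if member.title == "Dr":
        return "male"
    return member.sex or "unknown"


def change_room(member: Member) -> str:
    # A title says nothing about sex: rely only on what the member provided.
    return member.sex or "unknown"


selby = Member(name="Selby", title="Dr", sex="female")
print(biased_change_room(selby), change_room(selby))  # male female
```

Nothing here is a random glitch: the biased version does exactly what its author wrote.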

Similarly, back in 2010, e-tax asked for an extra confirmation when you entered a spouse of the same sex.

I’ve found yet another example of name validation with minimum characters. Sorry Joe, time to find a new childhood best friend.

I like to imagine the conversation behind developing this: “What’s the shortest name? Hmm, I can’t think of anything shorter than 4? Let’s make it 3.”
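Here is a minimal Python sketch of the difference. The four-character minimum is chosen purely for illustration (the exact rule on the form in question isn’t known), and both function names are hypothetical. Any fixed minimum length rejects someone’s real name, while a check that only rejects blank input doesn’t.

```python
def naive_name_is_valid(name: str, min_length: int = 4) -> bool:
    # Hypothetical rule: "nobody's name is shorter than this".
    return len(name.strip()) >= min_length


def name_is_valid(name: str) -> bool:
    # Only reject input that is empty or whitespace; any real name passes.
    return len(name.strip()) > 0


for name in ["Joe", "Li", "Ng", "Alexandra"]:
    print(name, naive_name_is_valid(name), name_is_valid(name))
```

With the naive rule, Joe, Li and Ng are all turned away; the simpler check accepts every one of them.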

TypeForm gives you the option to let users specify their own gender if they select “other”. Awesome. Wait… what does that copy say?

Allow user to select HIS gender. Oh, so close. Well intentioned, but bias slipped into the copy without anyone realising, like the well-meaning redneck.

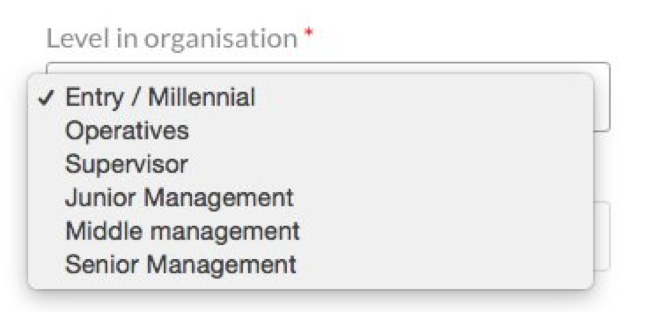

Millennials get a bad rap, and it’s pretty obvious some people in the media have more than an unconscious bias. This form assumes entry level is synonymous with millennial. People can be entry level at any age after a career change, and a few people would find this question difficult to answer: Mark Zuckerberg (Facebook founder), David Karp (Tumblr founder), Evan Spiegel (Snapchat co-founder), Kevin Systrom and Mike Krieger (Instagram co-founders). Basically every new startup is founded by a millennial.

Can algorithms be racist, sexist, ageist etc.?

Let’s look at some examples

"Ape"

Flickr accidentally labeled black people as “apes”. That’s bad. 2 months later, Google Photos auto-tagged black people as gorillas. Really? You see the press Flickr is getting and don’t think, maybe we should run some images of people of colour through our tests?

"gorillas"

Cameras asking if Asian individuals are blinking…

Google image search results:

- Search for “woman” — see only white women

- Search for “men” — see only white men

- Search for “unprofessional hair styles for work”: see mostly women of colour, while “professional hair styles for work” shows white women.

- Image search results for “CEO” show mostly white men (even though women make up 27% of CEOs), and the first female result in the list? Barbie

You may have seen the UN Women campaign that showed real Google autocomplete suggestions (at the time) for “Women shouldn’t…” and “Women need to…”.

Google has since removed these suggestions, as it has removed other blunders in the past.

Is this Google’s fault?

One could argue that Google is reflecting the attitudes of the world: that it is a mirror of the racism and sexism out there. When should Google intervene, as it did with the “Women shouldn’t…” example? If it does, is it creating filter bubbles and skewing the results? I don’t have the answers to these questions.

I’m not racist but… it’s my unconscious bias

In general, sexism, racism and other forms of discrimination are being built into the machine-learning algorithms that underlie these technologies.

The other week I found out that my algorithm is a racist

Nick didn’t intend it to be. He was running 2 creative sets, one with a white baby and another with a black baby. Whichever creative performed better was displayed more. Over time, people clicked on the white baby more, so it was shown more. It’s not clear whether this is unconscious bias, overt racism, or one baby performing better for a reason other than race; maybe it was cuter, or looking at the user and drawing more attention.
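A “show whichever performs better” rule is a greedy allocation, and it amplifies tiny differences. The Python sketch below is a hypothetical model, not Nick’s actual system: the creative names and the click-through rates (5.0% and 4.9%) are invented, and it adds each creative’s expected clicks instead of sampling random ones, so the run is deterministic. After one impression each, the slightly better performer wins every remaining impression, whatever the reason for the difference.

```python
def greedy_allocate(expected_ctr: dict[str, float], rounds: int) -> dict[str, int]:
    """Always show the creative with the best observed click-through rate."""
    impressions = {name: 0 for name in expected_ctr}
    clicks = {name: 0.0 for name in expected_ctr}
    for _ in range(rounds):
        untried = [name for name, shown in impressions.items() if shown == 0]
        if untried:
            # Show every creative once so each has an observed rate.
            choice = untried[0]
        else:
            # Greedy step: always pick the current leader, never explore.
            choice = max(impressions, key=lambda name: clicks[name] / impressions[name])
        impressions[choice] += 1
        # Deterministic simplification: add expected clicks instead of sampling.
        clicks[choice] += expected_ctr[choice]
    return impressions


print(greedy_allocate({"creative_a": 0.050, "creative_b": 0.049}, rounds=1000))
# {'creative_a': 999, 'creative_b': 1}
```

A 0.1 percentage point gap turns into a 999-to-1 split. Reserving some impressions for exploration, or capping how far the split can drift, are common mitigations, but neither removes whatever bias is in what users choose to click.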

(dis)Ability

So far I’ve only spoken about systems that aren’t designed for anyone other than white men, which leaves out over half the world. We haven’t even touched on the:

- 1 in 6 Australians affected by a disability

- 1 in 6 affected by hearing loss

- 30,000 totally Deaf

- Over 350,000 blind or low vision individuals

- Over 2 million individuals with dyslexia

- 2.6 million with a physical disability

Reducing biases in algorithms

How you train your algorithm will have a huge impact on whether biases are built into it, especially in the case of machine learning algorithms that learn from the images they are fed.

Systems that favour white faces most likely weren’t written to be racist, but they were fed certain images, often chosen by engineers or simply those readily available. If these are photos of people who are overwhelmingly white, the system will have a harder time recognising nonwhite faces.

You need to train on a wide set of data. The same goes for voice training: you need people with different accents and pitches for the whole population to be able to use it.
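One cheap habit before training is to count who is actually in the data. The Python sketch below assumes each training example carries a group label; the field name `group`, the example labels, the made-up 95/5 split and the 10% threshold are all hypothetical. It reports any group whose share falls below the threshold. It only measures representation; it doesn’t prove a model is fair, which also needs testing with each group.

```python
from collections import Counter


def underrepresented_groups(examples: list[dict], key: str = "group",
                            min_share: float = 0.10) -> dict[str, float]:
    """Return each group whose share of the dataset is below min_share."""
    counts = Counter(example[key] for example in examples)
    total = sum(counts.values())
    return {group: count / total
            for group, count in counts.items()
            if count / total < min_share}


# Made-up dataset for illustration: 95 lighter-skinned faces, 5 darker-skinned.
faces = [{"group": "lighter skin"}] * 95 + [{"group": "darker skin"}] * 5
print(underrepresented_groups(faces))  # {'darker skin': 0.05}
```

The same count works for voice data by labelling recordings by accent instead.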

Test for emotion

In the case of Flickr and Google, they could easily have brainstormed sensitive images to check that none of the tags were insensitive or hurtful, such as labeling dark-skinned individuals as ‘apes’ and ‘gorillas’, or labeling Auschwitz as a gym.
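That brainstorm can become an automated check that runs before every release. In the Python sketch below, `classify` stands in for whatever tagging model you use (here a deliberately broken fake), and the image file names and sensitive label list are hypothetical examples of what a team might brainstorm. The check flags any photo of a person that receives a label from the list.

```python
from typing import Callable

# Labels that must never be applied to photos of people (brainstormed by the team).
SENSITIVE_LABELS = {"ape", "gorilla", "animal"}


def find_hurtful_tags(images: list[str],
                      classify: Callable[[str], set[str]]) -> dict[str, set[str]]:
    """Map each photo of a person to any sensitive labels the model gave it."""
    problems = {}
    for image in images:
        bad = classify(image) & SENSITIVE_LABELS
        if bad:
            problems[image] = bad
    return problems


def fake_classifier(image: str) -> set[str]:
    # Stand-in model with exactly the kind of failure we want to catch.
    return {"person", "gorilla"} if image == "portrait_dark_skin.jpg" else {"person"}


people_photos = ["portrait_light_skin.jpg", "portrait_dark_skin.jpg"]
print(find_hurtful_tags(people_photos, fake_classifier))
# {'portrait_dark_skin.jpg': {'gorilla'}}
```

In a real pipeline, a non-empty result would fail the build. The harder part is the human one: making sure the photo set and the label list cover the people your product will actually meet.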

How to become a better designer, and make better designs

Our users aren’t us

Always remember you are not the user. Slow down, step away from shortcuts and consider things with real users, who are different from you, in mind.

Test with all the people

You need to test designs with all different kinds of users, particularly people who are different from you in terms of age, gender, race, ability etc., to reveal issues early. Identify your assumptions and test with people who fall outside of them.

If YouTube had tested with left-handed people, they would have caught the bug early.

Talk to yourself

One thing I like to do with copy is read it aloud to check that it works as a natural dialogue with a real user. If it’s not something you’d say to someone in real life, rework it. If it comes across as condescending, rework it. In the TypeForm example, reading the copy “allow user to select his gender” aloud would have revealed the unconscious bias that slipped in.

Change your system thinking

Move to system 1 thinking

2 * 2

What’s 2 times 2? 4. Easy

How about:

127 * 8

Panic. Palms sweaty. Eyes dilated. Math!

But once upon a time, 2 * 2 was hard. Driving is hard at first too, but it becomes easier over time, to the point where you can get halfway to work and realise you haven’t been paying conscious attention. With practice, you can move things into System 1 thinking.

Talk to strangers

You already get into cars with strangers that you summon via your phone. Since Uber drivers want a high rating, they’re way more talkative than cabbies. Talk to them. Learn new things from them.

Turn off your senses, turn on empathy

By turning off your senses you can turn on your empathy and understand what it’s like for certain users.

Mute your voice

Every few weeks I like to go to the Tradeblock café at the Victorian Deaf College, where students work as part of their VCAL program. Even just ordering my meal without my voice is hard enough, and it makes you more empathic to users who are deaf or mute.

Block your ears

Through an unfortunate accident involving my work laptop and a banana my speakers no longer work. So I’ve found myself using closed captions on videos and seeing how bad that experience can be.

Close your eyes

I had this grand idea, inspired by Facebook’s 2G Tuesdays, to use my computer one morning a week with the screen off and voice-over on. Most of the time I can’t last more than 10 minutes because it’s so difficult. It’s made me more passionate about making accessible designs.

The more you see different people, and understand what it’s like to live like them, the easier it becomes to recall them as per the availability heuristic and keep them in mind while designing.

Call people out

We all make these slips without realising what we’re saying. In our team we’ve created a culture where we call them out, not to tell anyone off, but to raise awareness.

I’ve noticed that when we are viewing anonymized user videos, there is a tendency to call people in technical roles a “he” and people looking for reception or nurse roles a “she”. I’m not perfect, I am definitely guilty of doing this too.

Surround yourself with different people

Think about your closest friends, or work friends. Are most of them the same gender as you? Similar age? Similar background, race, marital status? Probably. That’s the affinity bias, and there’s nothing wrong with that. But it’s good to expose yourself to different people, and there are plenty of opportunities to do this.

Sit next to someone different from you on public transport. Choose to stay at an Airbnb with a host who not only looks different to you, but whose bio mentions a different career and different interests from yours.

The ketchup test

I can’t remember the source (sauce), but I heard someone refer to the ketchup test. Where do you keep your ketchup: in the fridge? In the pantry? Either way, you are correct. But if I keep my ketchup in the pantry and there isn’t any left, I’m going to use BBQ sauce or something else from the pantry. If you’re a fridge keeper, you’ll reach for mayo or mustard. The options you see in front of you differ depending on where you’re from, what you’re used to and what you’re doing. These different experiences bring different perspectives to solving design problems.

Diverse Teams

If your team is homogeneous, what can you do to help change that? You make worse products with a monoculture, particularly when it doesn’t match your audience.

Diverse recruitment panels

If you’re responsible for hiring, make sure you have a diverse recruitment panel, for example at least one woman on the panel, to reduce affinity bias effects.

Diversify your candidate pool

Ensure that you have a diverse candidate pool by making your ads gender-neutral to attract the most diverse candidates, and then review the CVs of members of minority groups first.

Flexible hours and parental leave also help attract more female candidates.

Merit based pay and promotions

All pay, promotions and performance reviews should be merit-based. Managers commenting on a woman’s performance should ask themselves “would I say this to a man?” and sweat the small stuff.

Affirmative action

Depending on the state of your team, you may want to take affirmative action and explicitly recruit women or Aboriginal and Torres Strait Islander people, which is allowable as a special provision under the Equal Opportunity Act. Bringing equality is not discrimination.

Educate & evaluate yourself

It’s also worth educating yourself about the unconscious biases you have, evaluating them, and making sure they aren’t creeping into your decision making. You can take an unconscious bias test online. I’d recommend Project Implicit out of Harvard, but other organisations, like Google and Facebook, are doing some great work on implicit bias too.

- Implicit Bias Test: www.implicit.harvard.edu/

- Re:Work from Google: www.rework.withgoogle.com

- Facebook Managing Bias: www.managingbias.fb.com

We have a duty to disagree, to point out unconsidered assumptions and possible failure states. We need to point out when something might come off as insulting, insensitive or hurtful. We have to bring the user’s view into the design process to make things easier for them. Be the advocate for more empathetic and inclusive design, even if it doesn’t always make things organizationally easy for ourselves. It is the right thing to do. For the greater good.

Inspiration for this talk from:

Fryer Jr, R. G., & Levitt, S. D. (2004). The causes and consequences of distinctively black names. The Quarterly Journal of Economics, 767–805.

McGinn, K. L., & Tempest, N. (2000). Heidi Roizen. Harvard Business School Case 800-228 (revised April 2010).

Gladwell, M. (2007). Blink: The power of thinking without thinking. Back Bay Books.

Kahneman, D. (2011). Thinking, fast and slow. Farrar, Straus and Giroux.

Ely, K. The world is designed for men.

Dourish, P. The social lives of algorithms.